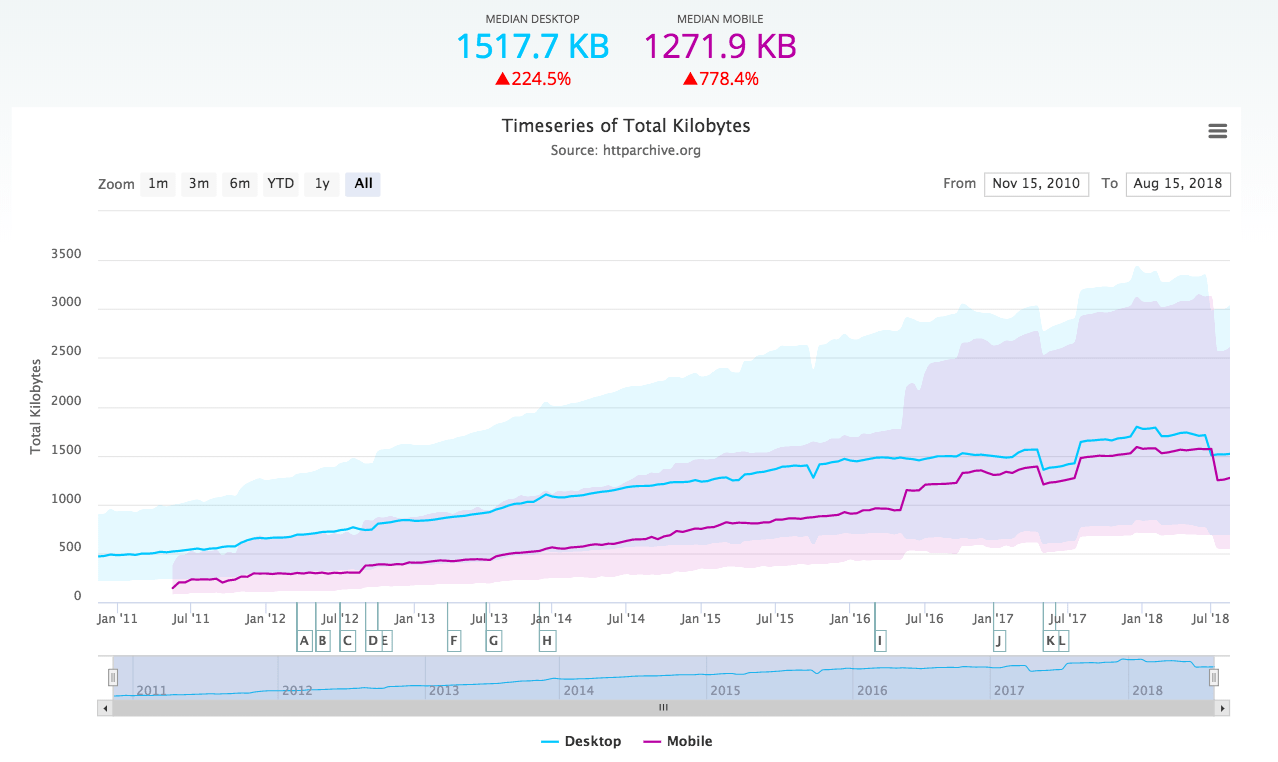

Power consumption continues to be a major cost (and general headache) for data centers. The latest numbers come from a consulting firm called the Uptime Institute. Even though server hardware has become more energy efficient, those gains are being offset by the continuous increase in computing power.

According to the report, computational performance in servers has increased by a factor of 27 from 2000 to 2006. The problem is that server energy efficiency only increased by a factor of 8 during the same period.

So why is that a problem? Well, here is why (quoted from InformationWeek):

> Power consumption per computational unit has dropped in the six years by 80%, but at-the-plug consumption has still risen by a factor of 3.4. Contributing to the problem are processor manufacturers, such as Intel, Advanced Micro Devices (AMD), and IBM, packing an increasing number of power-hungry chips into the same-size hardware, which generates more heat that requires increased cooling.

Or, put as a percentage, that is a 240% increase in data center power consumption.

Of course, it would have been much worse if energy efficiency had stayed the same as in 2000. We would have had a 2,600% increase in that case… Now that would have been a problem.
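
For anyone who wants to check the arithmetic, here is a rough sketch in Python. It assumes a simplified model where power draw scales as performance divided by efficiency; that model, and the names `percent_increase`, `performance_factor` and `efficiency_factor`, are just illustration and not something taken from the report itself.

```python
def percent_increase(factor: float) -> float:
    """Convert a growth factor (e.g. 3.4x) into a percent increase."""
    return (factor - 1.0) * 100.0


# Figures quoted above for 2000 to 2006.
performance_factor = 27.0  # computational performance grew 27x
efficiency_factor = 8.0    # energy efficiency grew 8x

# Assumed model: power draw grows as performance / efficiency.
power_factor = performance_factor / efficiency_factor

# The article's 240% is based on the rounded factor of 3.4.
rounded_power_factor = round(power_factor, 1)

print(f"Power factor: {power_factor:.3f} (rounded: {rounded_power_factor})")
print(f"Increase with efficiency gains: {percent_increase(rounded_power_factor):.0f}%")

# With efficiency frozen at its 2000 level, power would track performance.
print(f"Increase without efficiency gains: {percent_increase(performance_factor):.0f}%")
```

Note that 27 divided by 8 is 3.375, so the 3.4 in the quote is a rounded figure; using the unrounded value would give an increase a little under 240%.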

More reading: Servers consuming as much power as color TVs