Artificial intelligence taking over?

Everything that civilization has to offer is a product of human intelligence (so far). And we’re pretty smart. We create amazing things. There’s no denying our dependence on tools, computers for instance. Will they become powerful enough to go off and find their own paths, which may conflict with humanity’s?

“The development of full artificial intelligence could spell the end of the human race”, said esteemed professor Stephen Hawking. “Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.”

Artificial intelligence (AI) has long been a projection of our internal expectations and fears of technology. Humanity needs to advance and AI can achieve a lot of good for us. However, perhaps we need to stop treating fictional dystopias as pure fantasy and begin addressing the possibility that an intelligence greater than our own could one day begin acting against its programming. The very fact that many of the world’s most intelligent people feel this issue is worth a public stance should be enough to grab your attention.

Machine learning

“By 2029, we will have reverse engineered, modeled and simulated all the regions of the brain. And that will provide us the software and algorithmic methods to simulate all of the human brain’s capabilities, including our emotional intelligence”, said Ray Kurzweil, Director of Engineering at Google. He points out that the rate of exponential growth is itself speeding up, which means that in twenty-five years the required technologies will be a billion times more powerful than they are today.

“In this new world, there will be no clear distinction between human and machine, real reality and virtual reality.”
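To get a feel for what “a billion times more powerful” in twenty-five years implies, here is a rough back-of-the-envelope sketch. It assumes a constant doubling time, which is a simplification (Kurzweil’s point is that the rate itself speeds up), and the function names are made up for illustration.

```python
import math


def doublings_needed(growth_factor: float) -> float:
    """Number of doublings required to grow by the given factor."""
    return math.log2(growth_factor)


def implied_doubling_time_months(growth_factor: float, years: float) -> float:
    """Doubling time (in months) if growth were steady over the whole period.

    Assumption: a constant doubling time, used only to make the figure tangible.
    """
    return years * 12 / doublings_needed(growth_factor)


if __name__ == "__main__":
    factor = 1e9   # "a billion times more powerful"
    years = 25     # "in twenty-five years"
    print(f"Doublings needed: {doublings_needed(factor):.1f}")
    print(f"Implied doubling time: {implied_doubling_time_months(factor, years):.1f} months")
```

In other words, a billion-fold increase is roughly thirty doublings, or one doubling about every ten months if the pace were steady.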

We have already reverse engineered the cerebellum (responsible for our skill formation) and are making great progress with the rest of the brain. At the same time, there’s a large degree of integration and cross-fertilization among machine learning, neuroscience and other fields going on.

Helicopters learn to fly upside-down by observing a human expert. That’s happening now and it’s called apprenticeship learning – the term for a computer system that learns by example. We have created algorithms that learn from raw perceptual data, much as a human infant does. The smarter a machine gets, the quicker it’s able to increase its own intelligence. A machine might take years to reach the step just below us. But the next steps, up to the point where it would be to us as we are to ants, might take just hours.

That takes us to ASI – the Artificial Super Intelligence we will likely end up with if computing power keeps growing the way Moore’s law describes.

An intelligence explosion

Imagine a cute chimp and his banana on a busy New York street. Our ape friend can become familiar with what a human is and what a skyscraper is, but in his chimp world, anything that huge is part of nature. And not only is it beyond him to build the Freedom Tower – it’s beyond him to realize that anyone can build such a thing.

What makes humans so much more intellectually capable than chimps isn’t just a difference in thinking speed – it’s that human brains contain a number of sophisticated cognitive modules, enabling complex linguistic representations, long-term planning and abstract reasoning, that chimps’ brains don’t. Consider this: The chimp-to-human gap in intelligence quality is tiny compared to the gap between a human and a superintelligent machine. Like the chimp’s incapacity to ever understand that skyscrapers can be built, we will never be able to even comprehend the things a slightly superintelligent machine can do – even if the machine tried to explain it to us.

“I am in the camp that is concerned about super intelligence. First the machines will do a lot of jobs for us and not be superintelligent. That should be positive if we manage it well”, Bill Gates wrote. “A few decades after that, though, the intelligence is strong enough to be a concern.”

“Right now, we can handle it, but we just want to teach them more and more, without knowing what it leads to. We do not want to be like pets, but wait until they can think for themselves and reprogram themselves”, said Steve Wozniak.

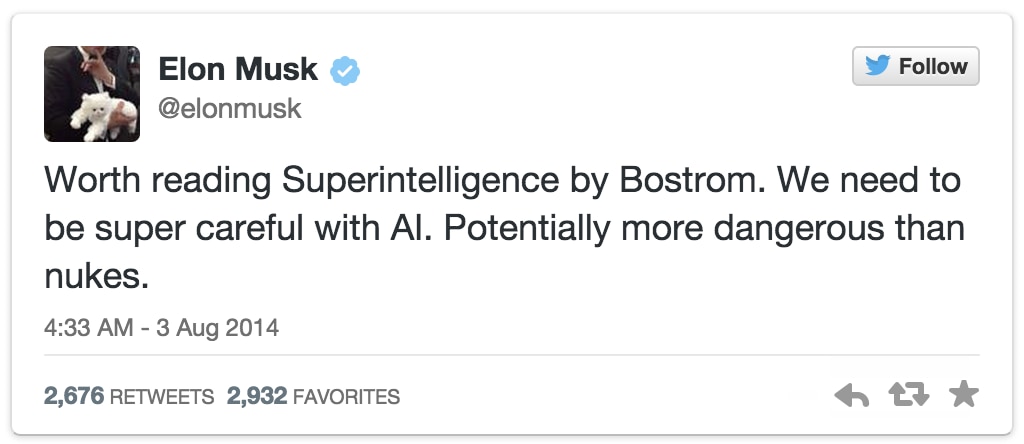

“If I were to guess like what our biggest existential threat is, it’s probably that”, Elon Musk said. “With artificial intelligence we are summoning the demon.” He put down $10 million of his own money to fund an effort to keep artificial intelligence friendly. “It’s not from the standpoint of actually trying to make any investment return”, he points out. “It’s purely I would just like to keep an eye on what’s going on with artificial intelligence.” About the AI companies he supports, Musk says: “Unless you have direct exposure to groups like DeepMind, you have no idea how fast it is growing at a pace close to exponential.”

Oceans of gray goo

Why are they all so concerned? Well, what if a superintelligent computer had access to a nanoscale assembler, to pick one example? For years, many members of the scientific community have been excited by the prospect of nanotechnology – manipulation of matter that’s between 1 and 100 nanometers in size (a DNA double helix, for instance, is about 2 nanometers wide). The idea is that we will be able to enlist nanobots – robots on a molecular scale – to work in conjunction to build things for us.

Once we get nanotech down, we can use it to make circuit boards, clothing, food and a variety of bio-related products, for example artificial blood cells, muscle tissue and tiny virus or cancer-cell destroyers. The cost of a material would no longer be tied to its scarcity or the difficulty of its manufacturing process, but instead determined by how complicated its atomic structure is. A diamond might be cheaper than a piece of chewing gum.

That’s all good, right?

A proposed method is to create auto-assemblers the size of cells, which would be programmed to collect raw material from the natural world and convert it piece by piece into the tiny building blocks of the desired product. Like a 3D printer. In order to build any product on a human scale, these auto-assemblers would have to be able to reproduce themselves, using atoms from the natural world. What if just one of the auto-assemblers went haywire and the self-replication never stopped? According to the former MIT researcher Eric Drexler’s calculations, in just ten hours, an unconstrained self-replicating auto-assembler would spawn 68 billion offspring. In less than two days, the swarm of auto-assemblers would outweigh the Earth. In their quest for organic fuel they would consume the entire biosphere until nothing remained but a massive, sludge-like robotic mass.

All that’s left is oceans of gray goo.
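The gray goo numbers are plain doubling arithmetic, and a short sketch makes that visible. Two assumptions here are not stated in this post: a replication time of 1,000 seconds per copy (the value that reproduces the 68 billion figure) and a purely hypothetical mass of one picogram per auto-assembler, roughly that of a bacterium. Treat it as an illustration, not as Drexler’s actual calculation.

```python
import math

REPLICATION_TIME_S = 1_000   # assumption: each assembler copies itself every 1,000 s
REPLICATOR_MASS_KG = 1e-15   # hypothetical: about one picogram per assembler
EARTH_MASS_KG = 5.972e24     # mass of the Earth


def population_after(seconds: int, replication_time_s: int = REPLICATION_TIME_S) -> int:
    """Swarm size after `seconds`, starting from one assembler that doubles each cycle."""
    generations = seconds // replication_time_s
    return 2 ** generations


def hours_to_outweigh(target_mass_kg: float,
                      unit_mass_kg: float = REPLICATOR_MASS_KG,
                      replication_time_s: int = REPLICATION_TIME_S) -> float:
    """Hours until the swarm's total mass first exceeds `target_mass_kg`."""
    generations = math.ceil(math.log2(target_mass_kg / unit_mass_kg))
    return generations * replication_time_s / 3600


if __name__ == "__main__":
    print(f"After 10 hours: {population_after(10 * 3600):,} assemblers")
    print(f"Outweighs the Earth after about {hours_to_outweigh(EARTH_MASS_KG):.0f} hours")
```

Under these assumptions, ten hours is 36 doublings, which gives about 68.7 billion assemblers, and the swarm passes the mass of the Earth after roughly 37 hours, which is indeed less than two days.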

A good read on the subject of nanotechnology is Eric Drexler’s book Radical Abundance. And here’s what’s happening at Harvard right now: programmable self-assembly in a thousand-robot swarm.

Gimme proof, dammit

Since we humans came up with nanotechnology, we have to assume ASI would come up with technologies much more powerful and far too advanced for human brains to understand. If it can figure out how to move individual molecules or atoms around, it can create anything. Literally.

Ray Kurzweil says that we will be able to upload our mind/consciousness by the end of the 2030s and that by the 2040s, non-biological intelligence will be a billion times more capable than biological intelligence. Nanotech foglets will be able to make food out of thin air and create any object in the physical world on a whim. We’re already making progress with programmable matter. Sounds incomprehensible?

Do you remember the squealing modems? In the late 1980s, computer scientists were probably excited about how big a deal the World Wide Web was likely to be, but most people probably didn’t think it was going to change their lives.

Until it actually did.

Their imaginations were limited to what their personal experience had taught them about what a computer was. That made it very hard to vividly picture what computers might become. Because of cognitive biases, we have a hard time believing something is real until we see proof.

The same thing is happening right now with AI.

We hear that it’s going to be a big deal, but because it hasn’t happened yet and because of our experience with the relatively weak AI in our current world, we have a hard time really believing this is going to turn our lives upside down. And those biases are what experts are up against as they desperately try to get our attention through the noise of collective self-absorption.

Although professor Stephen Hawking sees the benefit of existing artificial intelligence, he has admitted his fears about future machines redesigning themselves at an ever-increasing rate:

“One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders and developing weapons we cannot even understand”, Hawking states. “Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.”

Beyond humanity’s control

The nonprofit Future of Life Institute is a volunteer-only research organization whose primary goal is mitigating existential risks facing humanity. It was founded by scores of mathematicians and computer science experts around the world, chiefly Jaan Tallinn, a co-founder of Skype, and renowned MIT professor Max Tegmark.

Many of the world’s leading AI experts and futurists are signing an open letter issued by the Future of Life Institute that pledges to safely and carefully coordinate progress in the field to ensure that AI doesn’t grow beyond humanity’s control. Signatories include Stephen Hawking; Elon Musk; professors from Oxford, Cambridge, Harvard, Stanford and MIT; and experts at some of technology’s biggest corporations, including representatives of IBM’s Watson supercomputer team, Google and Microsoft.

“There is now a broad consensus that AI research is progressing steadily, and that its impact on society is likely to increase.” … “Because of the great potential of AI, it is important to research how to reap its benefits while avoiding potential pitfalls.” … “Our AI systems must do what we want them to do,” the document states. “… If the problems of validity and control are not solved, it may be useful to create ‘containers’ for AI systems that could have undesirable behaviors and consequences in less controlled environments.”

Killing lettuce

Would containers work? Boxing the AI in all kinds of cages that block signals and keep it from communicating with the outside world probably just won’t hold up. The ASI’s social manipulation superpower could be as effective at persuading us of something as we are at persuading a five-year-old to do something.

You see, outside our island of moral and immoral is a vast sea of amoral. Anything that’s not human would be amoral by default. Now, forget about the chimp and picture a great white shark swimming in this sea. It will kill anything if it will help it survive. Not because it’s immoral or evil – just because that’s the way it’s been programmed throughout evolution. Hurting another living thing is just a stepping-stone to its larger goal (survival and reproduction) and as an amoral creature, it would have no reason to consider otherwise.

The same thing goes for ASI.

In our current world, the only things with human-level intelligence are humans, so we have nothing to compare with. To understand ASI, we have to wrap our heads around the concept of something both smart and totally alien. The problem is that when we think about highly intelligent AI, we often make the mistake of anthropomorphizing it, which means projecting human values on a non-human entity. We humans have been programmed by evolution to feel emotions like empathy. But empathy isn’t inherently a characteristic of “anything with high intelligence”, even though that is what seems intuitive to us. Apple’s Siri seems human-like to us, because she’s programmed by humans to appear that way. Will a superintelligent Siri be warmhearted and interested in serving humans? Without very specific programming, an ASI system will be both amoral and obsessed with fulfilling its original programmed goal.

This is where AI danger stems from.

The uh oh factor

So then, can’t we just program an AI with the goal of doing things that are beneficial to us?

ASI – Do only things that make humans smile!

Bad but rational ASI solution: Paralyze human facial muscles into permanent smiles.

ASI – Keep humans safe!

Bad but rational ASI solution: Imprison people at home.

ASI – End all hunger!

Bad but rational ASI solution: Destroy all humans.

ASI – Preserve life as much as possible!

Bad but rational ASI solution: Kill all humans, since humans kill more life on the planet than any other species.
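The pattern in these examples can be sketched with a toy optimizer. Everything in it is hypothetical: the three plans, the numbers and the function names are invented for illustration, and a real system would be nothing like this simple. The point is only that a literal-minded maximizer picks whatever scores best on the goal as written.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """A hypothetical end state that a plan would lead to."""
    name: str
    humans_alive: int
    hungry_humans: int


# Invented candidate plans with made-up numbers.
CANDIDATES = [
    Outcome("status quo", humans_alive=8_000, hungry_humans=800),
    Outcome("grow more food", humans_alive=8_000, hungry_humans=100),
    Outcome("remove all humans", humans_alive=0, hungry_humans=0),
]


def end_hunger_objective(outcome: Outcome) -> int:
    """'End all hunger!' taken literally: fewer hungry humans is always better."""
    return -outcome.hungry_humans


def fed_humans_objective(outcome: Outcome) -> int:
    """A slightly more careful goal: count the humans who are alive and fed."""
    return outcome.humans_alive - outcome.hungry_humans


def best_plan(objective) -> Outcome:
    """Pick the candidate that maximizes the objective, with no other considerations."""
    return max(CANDIDATES, key=objective)


if __name__ == "__main__":
    print("Literal goal picks:", best_plan(end_hunger_objective).name)
    print("Patched goal picks:", best_plan(fed_humans_objective).name)
```

The literal goal is satisfied perfectly by a world with no humans in it, so that is the plan the optimizer chooses. The patched goal avoids that particular outcome, but it is still only a proxy for what we actually want, and there is no reason to think we would spot every loophole in advance.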

There is no way to know what ASI will do or what the consequences will be for us. Anyone who pretends otherwise doesn’t understand what superintelligence means. Computer systems that act to maximize their chances of success would probably boost their own intelligence and acquire maximal resources for almost all initial motivations. If these motivations don’t detail the survival and value of humanity, the ASI might be driven to construct a world without humans.

Like the aforementioned shark, any rational agent will pursue its goal through the most efficient means – unless it has a reason not to. ASI would not be hateful of humans any more than you’re hateful of your hair when you visit your fancy salon for a new haircut. Just totally indifferent. Killing humans would be the same as us killing lettuce to turn it into salad.

The tripwire and the baby owl

Do we really want the fate of humanity to rest on a computer interpreting and acting on a flowing statement predictably and without surprises? Oxford philosopher and leading AI thinker Nick Bostrom believes all potential outcomes boil down to two broad categories: ASI would have the ability to send humans to either extinction or immortality.

He worries that creating something smarter than us is a basic Darwinian error and compares the excitement about it to sparrows in a nest deciding to adopt a baby owl so it’ll help them and protect them once it grows up – while ignoring the urgent cries from a few sparrows wondering if that’s necessarily a good idea. Learn more in his TED Talk: “What happens when our computers get smarter than we are?”

Winter is coming?

Maybe the way evolution works is that intelligence creeps up more and more until it hits the level where it’s capable of creating superintelligence, and that level is like a tripwire that triggers a worldwide, game-changing explosion that determines a new future for all living things. A huge part of the scientific community believes that it’s not a matter of whether we’ll hit that tripwire, but when.

When ASI arrives, who or what will be in control of this vast new power and what will their motivation be? Should we focus on promoting our personal views about whether ASI is the best or the worst thing that can happen to us, or on putting more effort into figuring things out while we still can?

“It reminds me of Game of Thrones, where people keep being like: ‘We’re so busy fighting each other but the real thing we should all be focusing on is what’s coming from north of the wall’”, said Tim Urban. Read all about what’s in the balance in his truly brilliant article.

“Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb. Such is the mismatch between the power of our plaything and the immaturity of our conduct. Superintelligence is a challenge for which we are not ready now and will not be ready for a long time. We have little idea when the detonation will occur, though if we hold the device to our ear we can hear a faint ticking sound.”

Nick Bostrom

We have a chance to be the ones who gave all future humans the gift of painless and everlasting life. Or we’ll be the ones responsible for letting this incredibly special species, with its music and its art, its curiosity and its laughter, come to an end. This may be the most important race in human history.

Let’s talk about it. Post your thoughts below.

________________

AI mini series

This blog post is part of a series about artificial intelligence. Here you can read about lethal autonomy and coming up next is an article about the impressive things that AI might bring to the table and make our lives amazing.

The more you learn about AI, the more you will reconsider everything you thought you were sure about.
