Cost-effective and holistic

Real User Monitoring (RUM) and Synthetic Monitoring are the two predominant end-user monitoring techniques. Using them together gives a more accurate and comprehensive picture than either provides alone. Here, we’ll show you how complementary they are.

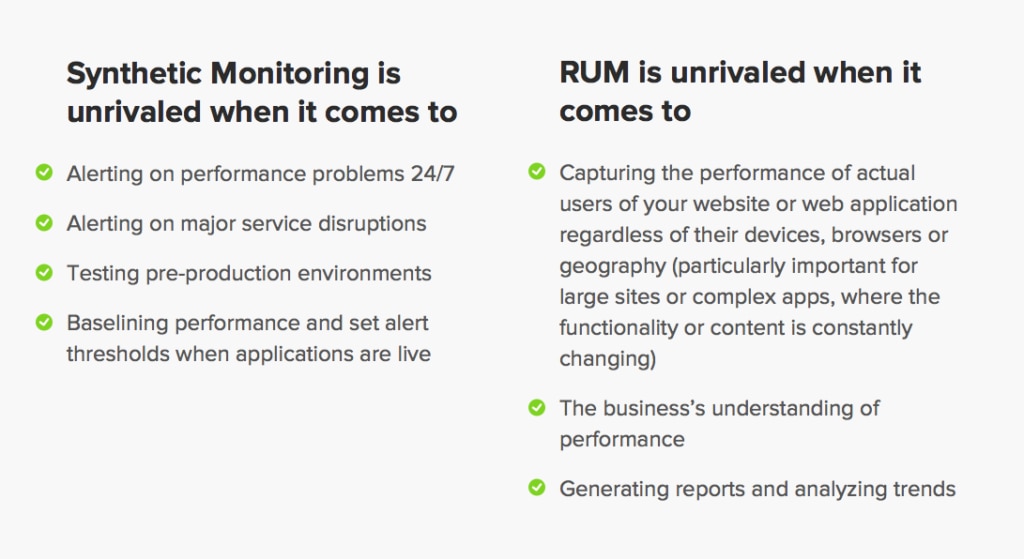

Synthetic monitoring relies on a remote, globally distributed infrastructure that visits a website periodically and records the performance data for each run. It doesn’t require any installation or code injection on your website to start. If an issue arises, synthetic monitoring provides the insight you need to quickly identify, isolate and resolve problems before they affect users and negatively impact revenue and brand equity.
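
To make the idea concrete, here is a minimal sketch of a single synthetic check in Python. It is an illustration under stated assumptions, not how any particular monitoring product works: the names `CheckResult`, `fetch_status` and `run_check` are hypothetical, and a real setup would run such checks on a schedule from several geographic locations and usually drive a full browser rather than issue a plain HTTP request.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    """Outcome of one synthetic visit (hypothetical structure)."""
    url: str
    ok: bool
    status: Optional[int]
    elapsed_ms: float
    error: Optional[str] = None


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Request the URL, download the body, and return the HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()  # include the body download in the timing
            return response.status
    except urllib.error.HTTPError as exc:
        # 4xx/5xx responses are raised as exceptions; keep the status code.
        return exc.code


def run_check(url: str, fetch: Callable[[str], int] = fetch_status) -> CheckResult:
    """Visit one URL once and record availability and elapsed time."""
    start = time.perf_counter()
    status: Optional[int] = None
    error: Optional[str] = None
    try:
        status = fetch(url)
    except Exception as exc:  # DNS failure, timeout, refused connection, ...
        error = str(exc)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    ok = status is not None and 200 <= status < 400
    return CheckResult(url, ok, status, elapsed_ms, error)
```

Calling `run_check("https://example.com/")` every few minutes from a scheduler and storing the results would give a basic availability and response-time history for that one page. The `fetch` parameter exists only so the check can be exercised without network access.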

The primary problem with synthetic monitoring is scaling its scope. For it to be truly valuable, you must identify and monitor every high-traffic or mission-critical website and service, so a synthetic-only approach is not without its flaws. As its name suggests, synthetic monitoring does not measure the experience of actual users. Consequently, you have visibility into the performance of the specific pages you test but are left in the dark about performance issues elsewhere.

With RUM, by contrast, a JavaScript snippet is injected into each page and reports the page load data for every request made for that page. There’s no need to pre-define your important use cases. As visitors go through the application, all of the performance timings are captured, so no matter what pages they see, performance data will be available to you. Knowing your user trends, such as geographic or channel distribution, helps you better define your business plan and, from a monitoring perspective, allows you to identify the key areas of your site that require the most attention.
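
As a rough sketch of what can be done with the collected data, the Python snippet below groups RUM beacons by a chosen dimension and summarizes load times per group. The beacon fields (`page`, `country`, `load_ms`) and the function name `summarize_rum` are assumptions made for illustration; the actual payload depends on the RUM snippet you deploy.

```python
from collections import defaultdict
from statistics import median


def summarize_rum(beacons, dimension):
    """Group beacons by one dimension and summarize load times per group.

    `beacons` is a list of dicts with hypothetical keys such as
    "page", "country" and "load_ms".
    """
    groups = defaultdict(list)
    for beacon in beacons:
        groups[beacon[dimension]].append(beacon["load_ms"])
    return {
        value: {"views": len(times), "median_ms": median(times)}
        for value, times in groups.items()
    }


# Made-up sample data, for illustration only.
sample = [
    {"page": "/", "country": "US", "load_ms": 900},
    {"page": "/", "country": "DE", "load_ms": 1300},
    {"page": "/checkout", "country": "DE", "load_ms": 4100},
]
by_page = summarize_rum(sample, "page")
by_country = summarize_rum(sample, "country")
```

Summarizing the same beacons by `page` and then by `country` shows both which pages are slow and where the affected visitors are, without having defined any use case in advance.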

Synthetic monitoring helps you diagnose and solve shorter-term performance problems. RUM helps you understand long-term trends and put synthetic monitoring test results in context.

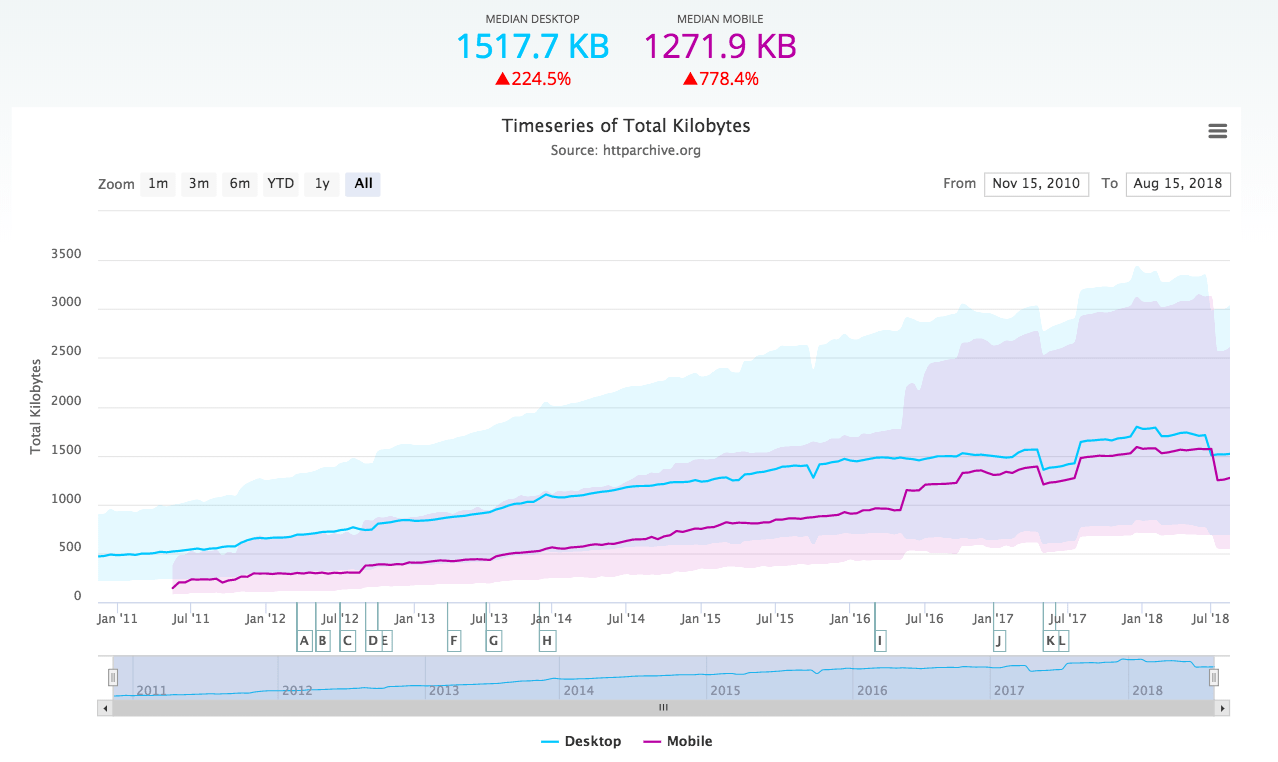

Steve Souders compares RUM and Synthetic page load times here.

Best practice

Synthetic monitoring lets you filter out much of the noise that RUM reports. Use it to detect latency and downtime promptly, and to isolate and diagnose the root cause of erratic performance under controlled, repeatable conditions. Use RUM to identify target areas for optimization, then create synthetic tests that monitor those pages from the relevant geographic areas and channels going forward. We’ve written about this in an older post.
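
One way to sketch that workflow in Python: rank pages by real-user traffic, keep the ones whose median load time exceeds a threshold, and test each from the countries most of its visitors come from. Everything here is an assumption made for illustration, including the beacon fields (`page`, `country`, `load_ms`), the 3000 ms threshold and the function name `plan_synthetic_tests`.

```python
from collections import Counter, defaultdict
from statistics import median


def plan_synthetic_tests(beacons, slow_ms=3000, top_pages=2, top_locations=2):
    """Pick slow, high-traffic pages from RUM data and suggest test locations."""
    by_page = defaultdict(list)
    for beacon in beacons:
        by_page[beacon["page"]].append(beacon)

    # Keep pages whose median load time is at or above the threshold.
    candidates = []
    for page, hits in by_page.items():
        median_ms = median(hit["load_ms"] for hit in hits)
        if median_ms >= slow_ms:
            candidates.append((len(hits), median_ms, page))

    # Most-visited slow pages first; ties are broken by slower median.
    candidates.sort(reverse=True)

    plan = []
    for _views, median_ms, page in candidates[:top_pages]:
        countries = Counter(hit["country"] for hit in by_page[page])
        locations = [country for country, _ in countries.most_common(top_locations)]
        plan.append({"page": page, "median_ms": median_ms, "locations": locations})
    return plan


# Made-up sample data, for illustration only.
sample = [
    {"page": "/checkout", "country": "DE", "load_ms": 4200},
    {"page": "/checkout", "country": "DE", "load_ms": 3900},
    {"page": "/checkout", "country": "US", "load_ms": 3100},
    {"page": "/", "country": "US", "load_ms": 900},
    {"page": "/", "country": "US", "load_ms": 1100},
    {"page": "/search", "country": "FR", "load_ms": 5000},
]
plan = plan_synthetic_tests(sample, top_pages=1, top_locations=1)
```

With this sample, the plan contains a single entry for `/checkout` tested from `DE`: it is the slow page with the most real-user traffic, and most of its visitors come from Germany. The thresholds and ranking rule are design choices you would tune to your own traffic.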

With this practice, you get full visibility into application usage and performance, and you understand the network or channel distribution and flow of your users. Good luck! And remember what Jack Johnson sings in this song.