Even a few seconds of downtime can have a direct and substantial effect on a company’s balance sheet, especially in e-commerce. The impact of downtime isn’t solely about lost sales, as many visitors land on your webpages to research future purchases. If the website is offline during this research, the effect can be just as severe: your brand becomes much less likely to be considered when those visitors are ready to buy. Website monitoring that helps minimize the impact of degraded web performance is a crucial investment for any company that relies on its online presence.

Website monitoring is often seen as simply a way to get alerted when a website goes down or when a crucial transaction becomes too slow or unavailable. But real-time alerts are not the only thing you gain from monitoring your online assets. Analyze the data you collect, and it’ll help you detect and solve website issues long before any of your users is affected.

Unexpected Failures Shouldn’t Be Unexpected

Unless your web server experiences a catastrophic and unusual event, such as catching fire, it’s unlikely to simply “go down.” Most web hosts now use cloud technologies to balance loads and to handle individual server hardware failures automatically on their customers’ behalf.

Most website outages result from errors that accumulate unnoticed across many sources and eventually build up to a complete failure. The signs of imminent failure are typically there if you’ve been monitoring website performance and activity correctly.

It All Begins With Ping and DNS Testing

One of the most basic availability tests, the humble ping, does little more than tell you whether the server hosting your website is reachable. This is the starting point of all availability testing and should be a standard part of your monitoring toolkit. Checking ping response time regularly (we recommend once per minute) gives you very quick confirmation that the server is up.
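A true ICMP ping usually needs raw-socket privileges, so the sketch below swaps in a closely related check: timing a TCP handshake to the web server’s port. This is a substitute technique, not ICMP ping, and the function name `tcp_connect_time_ms` is hypothetical. A useful side effect is that it confirms something is actually listening on the HTTPS port, not just that the host answers.

```python
import socket
import time


def tcp_connect_time_ms(host: str, port: int = 443, timeout: float = 5.0) -> float:
    """Return how long it takes to open a TCP connection to host:port, in ms.

    Stand-in for ICMP ping (hypothetical helper): no special privileges needed.
    If `host` is a name rather than an IP, DNS resolution time is included.
    Raises OSError (e.g. a timeout or ConnectionRefusedError) on failure.
    """
    start = time.perf_counter()
    # create_connection resolves the host and completes the TCP handshake
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection is closed immediately; we only care about timing
    return (time.perf_counter() - start) * 1000.0
```

Calling something like `tcp_connect_time_ms("example.com")` once per minute and storing each result gives you the response-time history that the rest of this article relies on.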

The same is true of DNS. DNS testing ensures that the internet’s “virtual phone book” has the right details for directing traffic to your web server. If your DNS records go out of sync for any reason, visitors may see “server not found” or connection timed-out errors instead of the page they requested. This article tells you more about the mechanics of DNS testing.
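A DNS check can be sketched with Python’s standard `socket.getaddrinfo`, which asks the operating system’s resolver (including any cache it keeps) for a host’s addresses. This measures what a client on the monitoring machine experiences, not a query to a specific authoritative name server. The helper names and the idea of comparing against a list of expected IPs are assumptions for illustration.

```python
import socket
import time


def dns_lookup(hostname: str) -> tuple[float, list[str]]:
    """Resolve hostname via the system resolver; return (elapsed_ms, addresses)."""
    start = time.perf_counter()
    infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP
    addresses = sorted({info[4][0] for info in infos})
    return elapsed_ms, addresses


def dns_matches(hostname: str, expected: set[str]) -> bool:
    """Hypothetical check: do the resolved addresses match what we expect?"""
    _, addresses = dns_lookup(hostname)
    return set(addresses) == expected
```

Recording `elapsed_ms` alongside the up/down result matters: a lookup that still succeeds but takes steadily longer is exactly the kind of early warning discussed next.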

When it comes to preventing future downtime, the up or down result of each test matters less than the time each request takes to complete. This is where regular tests and historical analysis become so important.

Historical Reporting Reveals Developing Problems

A future outage is often preceded by a period of declining web performance. For example, ping and DNS tests still succeed, yet response times steadily increase.

To catch this, you need to establish a baseline of normal performance against which present and future test results can be compared. Transient network conditions can make response times fluctuate and rise temporarily above baseline values; these short-lived variations typically aren’t a serious cause for concern. You should also keep in mind the real-world speed limit of ping: signals cannot travel faster than light through fiber, so a distant test location always adds some minimum latency, and your analysis should account for it.
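One simple way to express a baseline is the median and the 95th percentile of historical response times. The sketch below assumes you already have a list of past samples in milliseconds; the names `Baseline`, `build_baseline`, and `is_above_baseline` are hypothetical, and percentile-based baselining is just one reasonable choice among many.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Baseline:
    median_ms: float
    p95_ms: float


def build_baseline(samples_ms: list[float]) -> Baseline:
    """Summarize historical response times into a simple baseline."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
    cuts = statistics.quantiles(samples_ms, n=20)
    return Baseline(median_ms=statistics.median(samples_ms), p95_ms=cuts[-1])


def is_above_baseline(sample_ms: float, baseline: Baseline) -> bool:
    """True if a single sample is slower than roughly 95% of historical samples."""
    return sample_ms > baseline.p95_ms
```

By construction, about 5% of normal samples land above the 95th percentile, so a single sample over `p95_ms` is exactly the kind of transient variation that isn’t worth an alert on its own.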

However, if the response time continues to increase without returning to baseline levels, you should take this as an early warning that something is wrong with your site, or your ISP’s network configuration. You can then begin the troubleshooting process, looking to correct the underlying issues before the site fails completely.
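The difference between a transient spike and a sustained slowdown can be sketched as “every one of the last N samples is well above the baseline median.” The function name, the 1.5× factor, and the 10-sample window below are hypothetical values to tune for your own site; with once-per-minute checks, a window of 10 means ten consecutive slow minutes.

```python
def sustained_slowdown(recent_ms: list[float], baseline_median_ms: float,
                       factor: float = 1.5, window: int = 10) -> bool:
    """Flag a slowdown only if the last `window` samples ALL exceed
    factor * baseline median, so a single brief spike does not trigger it."""
    if len(recent_ms) < window:
        return False  # not enough data yet to call it sustained
    threshold = factor * baseline_median_ms
    return all(sample > threshold for sample in recent_ms[-window:])
```

Requiring every sample in the window to be elevated trades a few minutes of detection delay for far fewer false alarms from ordinary network noise.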

Going Beyond the Network Layer

SQL query execution is another area that, if closely monitored with a database monitoring solution, reveals problems early. As with ping and DNS testing, the key lies in how long it takes to complete database transactions.
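Timing a query is straightforward in principle. The sketch below uses Python’s built-in `sqlite3` module as a self-contained stand-in for a MySQL-backed CMS (MySQL would need a separate driver); the `comments` table and the `timed_query` helper are hypothetical.

```python
import sqlite3
import time


def timed_query(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> tuple[float, list]:
    """Run a query and return (elapsed_ms, rows)."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()  # fetch so the full result is timed
    return (time.perf_counter() - start) * 1000.0, rows


# Demo against an in-memory database with a hypothetical comments table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE comments (id INTEGER PRIMARY KEY, page_id INTEGER, body TEXT)")
conn.executemany("INSERT INTO comments (page_id, body) VALUES (?, ?)",
                 [(i % 50, f"comment {i}") for i in range(5000)])
elapsed_ms, rows = timed_query(conn, "SELECT COUNT(*) FROM comments WHERE page_id = ?", (7,))
print(f"{elapsed_ms:.2f} ms -> {rows}")
```

In practice you would time a handful of representative queries your site actually runs and store the results like any other response-time sample. MySQL can also record slow statements itself through its slow query log.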

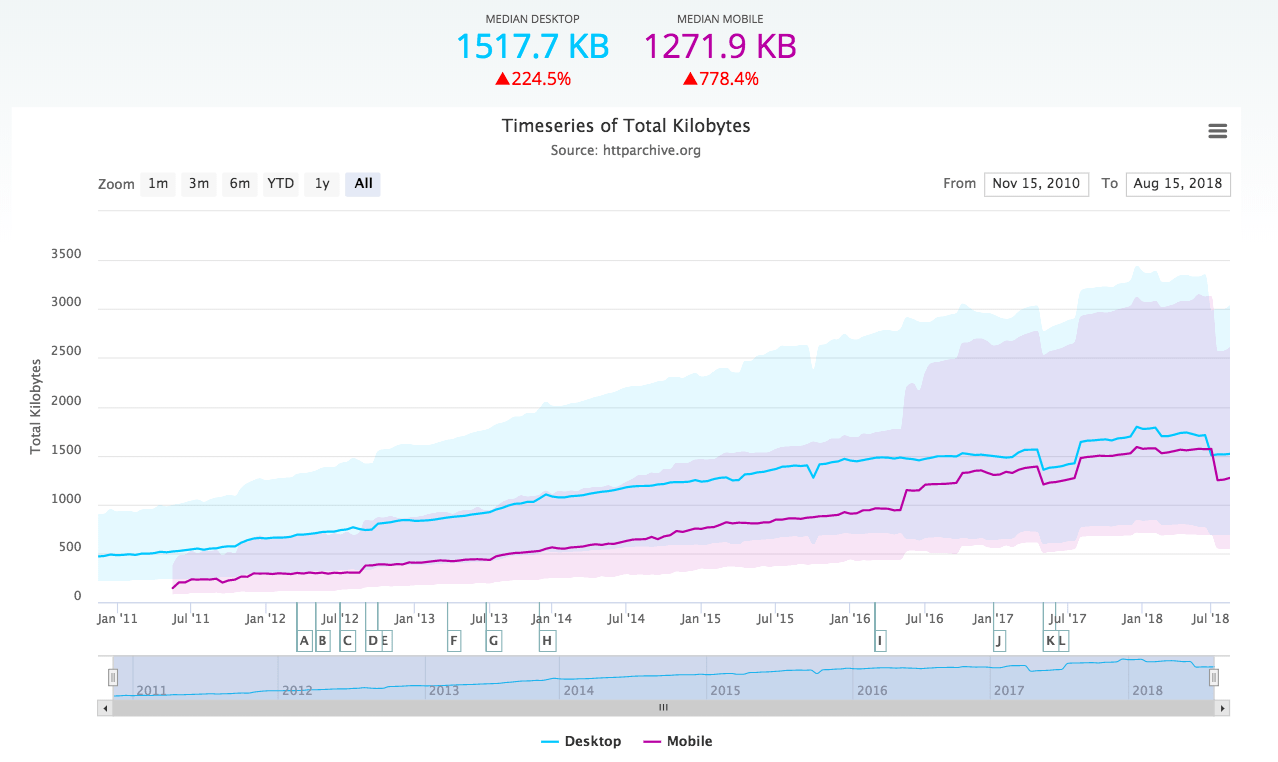

Depending on your website’s functionality, the database powering your content management system (CMS) can grow quickly. Every webpage and visitor comment is stored in the database, as is any other data you collect via the CMS, such as web traffic statistics, cached content, and sales orders.

As the database grows, so does the time required to complete a read or write operation. The database also occupies physical disk space, which in most cases is limited by the storage available on your web server.

If the database reaches its size limit, your website may stop responding. In a default MySQL installation, the MyISAM storage engine allows tables of up to 256TB. This limit can be raised, but without additional computing resources, performance may suffer. It’s also highly unlikely your ISP will allocate 256TB for a content management system database. Most hosting packages offer 1–2GB as standard, meaning you could run out of storage far sooner than you might expect.
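A rough estimate of how long you have before hitting a hosting quota can be made by extrapolating recent growth linearly. The sketch assumes you record the database size once a day (for MySQL, summing `data_length` and `index_length` from `information_schema.TABLES` is one common source) and a hypothetical 2GB quota; `days_until_full` is an illustrative name, not a library function.

```python
from typing import Optional


def days_until_full(size_history_mb: list[float], quota_mb: float) -> Optional[float]:
    """Linearly extrapolate daily database-size samples to the quota.

    size_history_mb holds one size measurement per day, oldest first.
    Returns None if the database is not growing.
    """
    if len(size_history_mb) < 2:
        raise ValueError("need at least two daily samples")
    days = len(size_history_mb) - 1
    # Average daily growth between the first and last sample
    growth_per_day = (size_history_mb[-1] - size_history_mb[0]) / days
    if growth_per_day <= 0:
        return None
    return (quota_mb - size_history_mb[-1]) / growth_per_day
```

For example, a database that grew from 1000MB to 1030MB over three days is growing 10MB per day, leaving about 102 days before a 2048MB quota is reached. Growth is rarely perfectly linear, so treat the number as an early-warning estimate, not a deadline.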

Again, testing query execution times, establishing a baseline of normal performance, and analyzing the results routinely are essential to identifying potential problems in advance. If you notice a continual increase in the time taken to read from and write to the database, you know problems are building. Even if your site doesn’t fail, investing time and resources in database performance tuning will improve overall site performance and the user experience.
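A “continual increase” can be quantified as the slope of a least-squares line fitted through successive measurements, for example the daily median execution time of one query. The sketch below computes that slope directly; `trend_slope`, `is_drifting`, and the 1%-per-sample threshold are hypothetical choices for illustration.

```python
def trend_slope(values: list[float]) -> float:
    """Least-squares slope of values against their index (units per sample)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    var = sum((x - mean_x) ** 2 for x in range(n))
    return cov / var


def is_drifting(values: list[float], max_pct_per_sample: float = 1.0) -> bool:
    """True if values rise by more than max_pct_per_sample % of their mean per sample."""
    mean_y = sum(values) / len(values)
    return trend_slope(values) / mean_y * 100.0 > max_pct_per_sample
```

Using a fitted slope instead of comparing just the first and last value makes the check less sensitive to a single unusually fast or slow day.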

Understanding Web Performance as Seen by Your Users

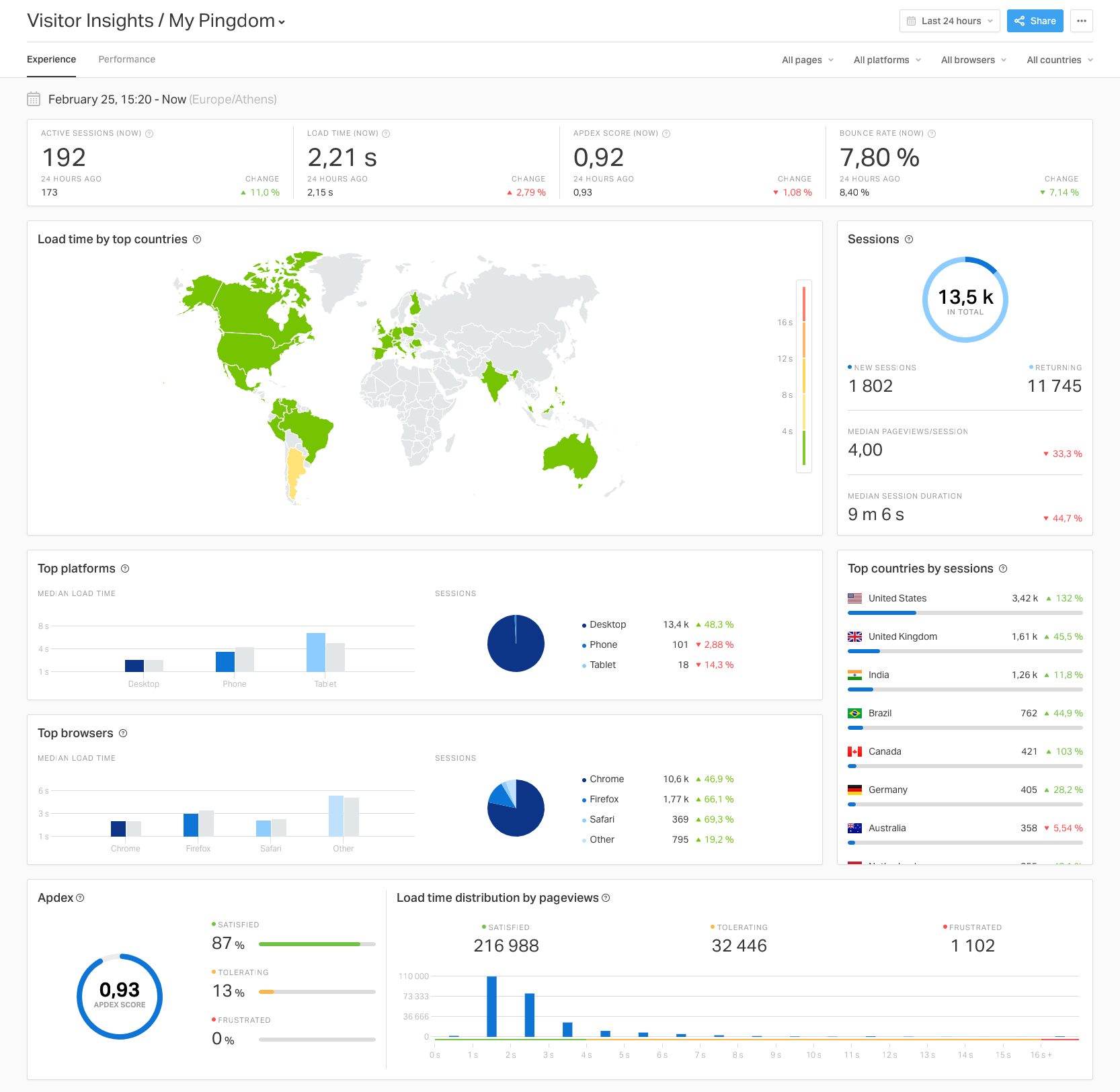

Website monitoring can help you analyze not just how (and whether) the web server responds in an idealized “testing” environment. With real user monitoring (RUM), it can also tell you when actual users experience performance hiccups and let you analyze typical page speed across different user characteristics, such as location, browser, or device. Ultimately, it’s the experience of actual users that matters most.
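Conceptually, analyzing RUM data by user characteristic is a group-by over page-load measurements reported from visitors’ browsers (typically collected via the browser’s Navigation Timing API). The sketch assumes each measurement arrives as a dict with hypothetical `country`, `browser`, and `load_ms` fields; real RUM products use their own schemas.

```python
import statistics
from collections import defaultdict


def median_load_by(beacons: list[dict], key: str) -> dict[str, float]:
    """Median page-load time (ms) for each value of `key` (e.g. country, browser)."""
    groups: dict[str, list[float]] = defaultdict(list)
    for beacon in beacons:
        groups[beacon[key]].append(beacon["load_ms"])
    return {value: statistics.median(times) for value, times in groups.items()}


# Hypothetical sample of RUM measurements
beacons = [
    {"country": "US", "browser": "Chrome", "load_ms": 1200},
    {"country": "US", "browser": "Firefox", "load_ms": 1500},
    {"country": "DE", "browser": "Chrome", "load_ms": 2100},
    {"country": "DE", "browser": "Chrome", "load_ms": 2500},
    {"country": "US", "browser": "Chrome", "load_ms": 1000},
]
print(median_load_by(beacons, "country"))
```

The median is used here because page-load times are usually skewed by a few very slow visits, which would inflate an average.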

RUM gives you a complete overview that makes it much easier to spot bottlenecks and the weakest aspects of your site’s configuration in the real world. It therefore makes sense to choose a monitoring solution that captures and stores web performance data as seen by your actual visitors, so you can analyze historical data over a longer period. For example, SolarWinds® Pingdom® lets you analyze data in the visitor insights report for up to 400 days (depending on the subscription plan).

Start Monitoring Your Website Today

Although simple website availability testing can reveal immediate problems with your website, ongoing comprehensive monitoring is crucial to identifying long-term issues causing outages. Testing provides a snapshot of current website health. Monitoring creates a baseline of performance, allowing you to identify any deterioration over a longer period.

Intermittent errors are particularly problematic because they are hard to spot. Often, these issues become apparent only once you have a baseline built from days’ or weeks’ worth of test results. Using historical trends, you can find the issue and narrow down its potential causes before any user is affected by an outage.
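One way historical data exposes intermittent errors is by grouping check results by hour of day: a handful of failures looks like noise within a single day, but failures that cluster at the same hour across many days point to a recurring cause (a scheduled job, for example). The sketch assumes you have a list of `(timestamp, succeeded)` pairs; the function name and sample data are hypothetical.

```python
from collections import Counter
from datetime import datetime


def failure_rate_by_hour(results: list[tuple[datetime, bool]]) -> dict[int, float]:
    """results: (timestamp, succeeded) per check. Returns failure rate per hour of day."""
    totals: Counter = Counter()
    failures: Counter = Counter()
    for ts, succeeded in results:
        totals[ts.hour] += 1
        if not succeeded:
            failures[ts.hour] += 1
    return {hour: failures[hour] / totals[hour] for hour in sorted(totals)}


# Hypothetical history: checks at 02:00 and 14:00 on three days; 02:00 always fails
results = [(datetime(2024, 1, day, hour), hour != 2) for day in (1, 2, 3) for hour in (2, 14)]
print(failure_rate_by_hour(results))
```

The same grouping works by day of week or by monitoring location; the point is that the pattern only emerges once enough history has accumulated.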

If you’re looking to get started with analyzing web performance data, check out this guide on how to choose the right website monitoring tool. And when you’re ready, be sure to sign up for a free Pingdom trial and see what’s possible with a website monitoring solution.