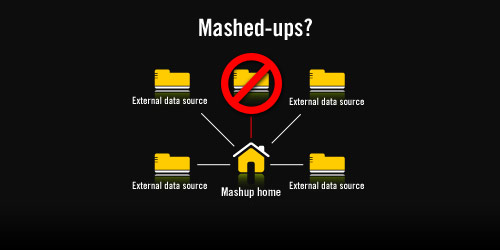

The term “mash-up” goes hand in hand with Web 2.0. It refers to a website that combines content from several different sources and mashes it up into something new. Google, Yahoo, eBay, Amazon, and many others are providing access to their data through Web service APIs. The upside is obvious. Being able to pull in data from lots of different services gives you a huge toolbox for developing interesting, fun, useful, or just plain weird online applications and websites.

To see the creativity this has unleashed, look no further than John Musser’s Programmable Web, or go directly to his list of the most popular mash-ups.

We love mash-ups. They are making the Web a much more interesting place. However, such a large and complex beast as the Internet doesn’t always behave well, and mash-up creators need to be prepared for that.

Losing the “up” in mash-up

So are there really downsides to this mash-up phenomenon? Yes, there are, even if you discount the yet-another-Google-Maps-mash-up syndrome.

What sets a mash-up apart from a regular website is that it has more links in the chain that can break. Every external Web service you add to your mash-up increases the risk of your website not working.

Show me the numbers

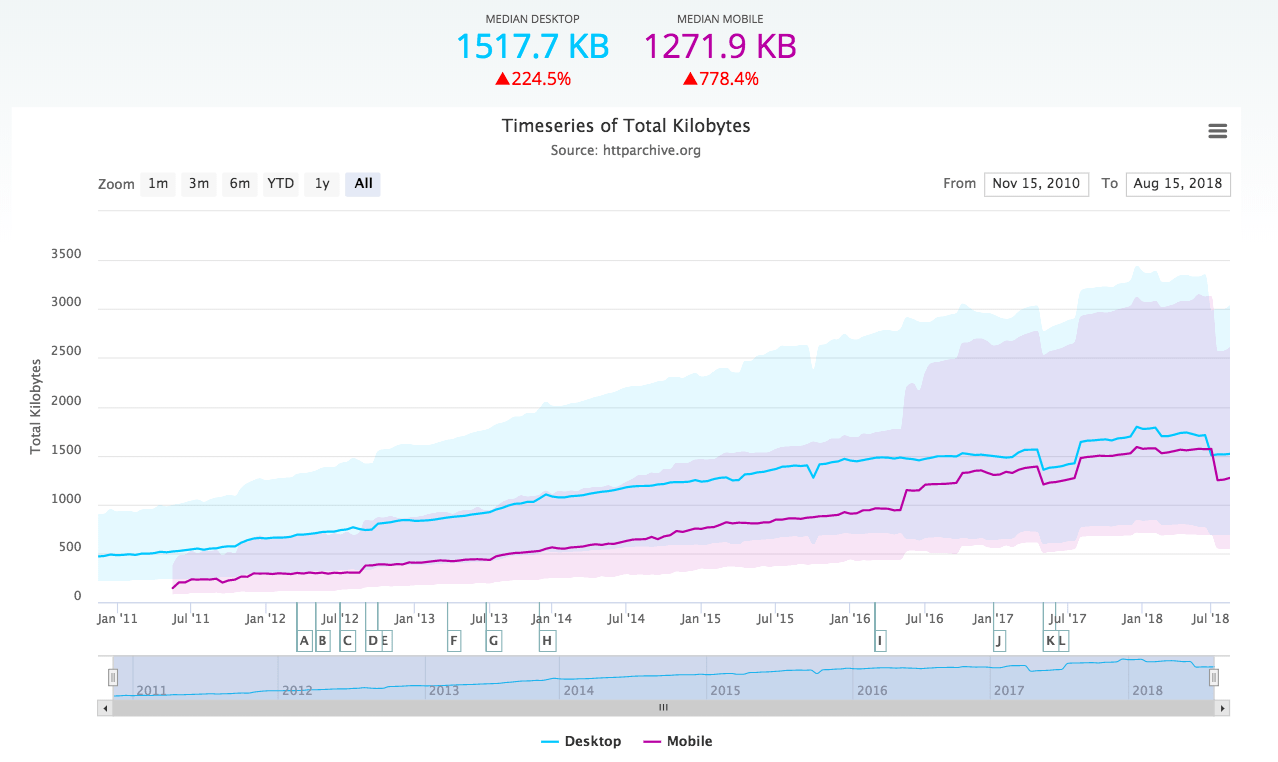

Say your web host delivers 99.9 percent uptime. That means your website will be unavailable for around 43 minutes per month (0.1 percent of a 30-day month), which, to be honest, is better than what most hosting companies actually deliver. That is your starting point.

Let us then assume that each of the external sources you are using has very good reliability as well, say 99.9 percent here too.

Your site now needs both the host and the external source to be up, so adding one single external source makes it work 99.9 percent times 99.9 percent of the time, which is about 99.8 percent. All of a sudden, we have roughly doubled the risk of a faulty website.

Let’s say we create a real super mash-up that uses 10 different external Web services. Together with the host, that is 11 links that all have to work, which brings us down to 98.9 percent! That may not sound so bad, but it is almost eight hours per month when your site will not be functional. So even if every one of your external sources works 99.9 percent of the time, you get more than ten times the downtime of the host alone.
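The arithmetic above can be written out as a short worked equation. It keeps the article's simplifications (components fail independently and the site needs every one of them) and adds a 30-day, 720-hour month; the symbols a (availability of each component) and n (number of external services) are introduced here for illustration.

```latex
\begin{aligned}
A_{\text{site}} &= a^{\,n+1} && \text{(the host plus } n \text{ external services, each with availability } a\text{)}\\
0.999^{11} &\approx 0.98905 && (a = 0.999,\ n = 10)\\
(1 - 0.98905) \times 720\,\text{h} &\approx 7.9\,\text{h of downtime per month}\\
(1 - 0.999) \times 720\,\text{h} &= 0.72\,\text{h} \approx 43\,\text{min for the host alone}
\end{aligned}
```

Dividing 7.9 hours by 0.72 hours gives roughly 11, which is where "more than ten times the downtime" comes from.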

Getting the “up” back in mash-up

So, what can you do to maximize the availability of a working mash-up site? What can you do to make it less sensitive to failing external Web services?

- Cache, cache, cache. Many Web service providers will ask you to do this anyway, and you often have a limited number of requests you can make every day. Depending on the type of data you have, cached information can also work as a fallback when the regular Web service is not responding.

- Redundancy. Is some of your data available from multiple sources? Use that as a fallback in case one of them doesn’t work.

- Be graceful. Don’t let your website freeze or just deliver an error message if you are unable to access a Web service. Try to have some default data you can use. If the information has to be real time, then at least be clear and inform your visitor why your site is not working.
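The three tactics above can be combined in a single wrapper around each external data source. What follows is a minimal sketch under stated assumptions, not any provider's API: `ResilientSource`, its parameters, and the five-minute default cache lifetime are hypothetical, each source is modelled as a plain Python function that either returns data or raises, and a real site would catch specific network errors rather than every exception.

```python
import time
from typing import Callable, Optional

# Hypothetical type: a source is any function that returns data or raises.
Fetcher = Callable[[], str]


class ResilientSource:
    """Hypothetical wrapper combining caching, redundancy and a default."""

    def __init__(self, fetchers: list[Fetcher], default: str,
                 ttl_seconds: float = 300.0) -> None:
        self.fetchers = fetchers      # primary source first, then fallbacks
        self.default = default        # shown when nothing else is available
        self.ttl = ttl_seconds        # how long a cached value counts as fresh
        self._cached: Optional[str] = None
        self._cached_at = 0.0

    def get(self) -> tuple[str, str]:
        """Return (data, origin); origin is cache, live, stale or default."""
        now = time.monotonic()
        # 1. Cache: a fresh cached value saves a request against the daily quota.
        if self._cached is not None and now - self._cached_at < self.ttl:
            return self._cached, "cache"
        # 2. Redundancy: try each source in order until one answers.
        for fetch in self.fetchers:
            try:
                value = fetch()
            except Exception:  # a real site would catch specific network errors
                continue
            self._cached, self._cached_at = value, now
            return value, "live"
        # 3. Every source failed: an expired cached value beats nothing.
        if self._cached is not None:
            return self._cached, "stale"
        # 4. Be graceful: fall back to default data instead of an error page.
        return self.default, "default"


if __name__ == "__main__":
    def primary() -> str:
        raise TimeoutError("primary service not responding")

    def backup() -> str:
        return "data from backup service"

    source = ResilientSource([primary, backup], default="No data right now")
    print(source.get())  # ('data from backup service', 'live')
    print(source.get())  # ('data from backup service', 'cache')
```

Returning the origin alongside the data lets the page decide what to show: `"stale"` or `"default"` is the cue to tell visitors that some information may be out of date or missing, rather than letting the page freeze or show a bare error.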

As Web services become more widespread and more relied upon, they will also place new demands on their providers to deliver a stable service. Even today, if Google Maps stops working, thousands of dependent websites stop with it.

P.S. Yes, the math was simplified, but the numbers are just there to make a point, and we were quite optimistic. Redo the calculations with 99.8 percent instead and you end up with almost 16 hours of downtime per month. Starting with 99 percent would give us more than three days of downtime. Here, “downtime” means a non-working website.
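The P.S. figures can be reproduced with a few lines of Python. This sketch keeps the article's simplifications: the host and each of the 10 external services get the same availability and are assumed to fail independently. It also adds an assumption of its own, a 30-day (720-hour) month. The function names are made up for illustration.

```python
HOURS_PER_MONTH = 30 * 24  # assumption: a 30-day month


def site_availability(per_component: float, external_services: int) -> float:
    """Chance that the host and every external service are up at once,
    assuming independent failures and that the site needs all of them."""
    return per_component ** (external_services + 1)


def monthly_downtime_hours(per_component: float, external_services: int) -> float:
    """Expected hours per month during which the mash-up does not work."""
    return (1.0 - site_availability(per_component, external_services)) * HOURS_PER_MONTH


if __name__ == "__main__":
    for a in (0.999, 0.998, 0.99):
        hours = monthly_downtime_hours(a, external_services=10)
        print(f"{a:.1%} per component: site up {site_availability(a, 10):.1%}, "
              f"{hours:.1f} h down per month")
```

Run as a script, it reports roughly 7.9, 15.7 and 75.4 hours of monthly downtime for 99.9, 99.8 and 99 percent per component; 75 hours is a little over three days.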